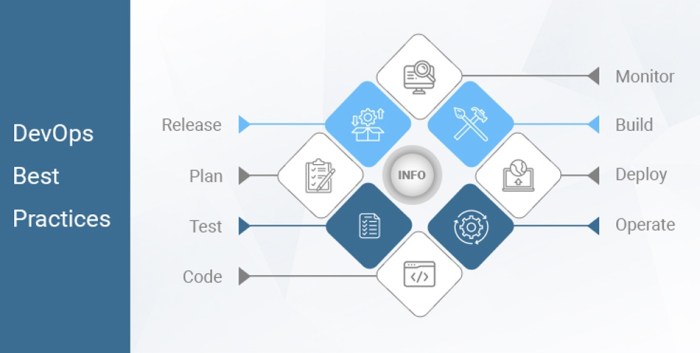

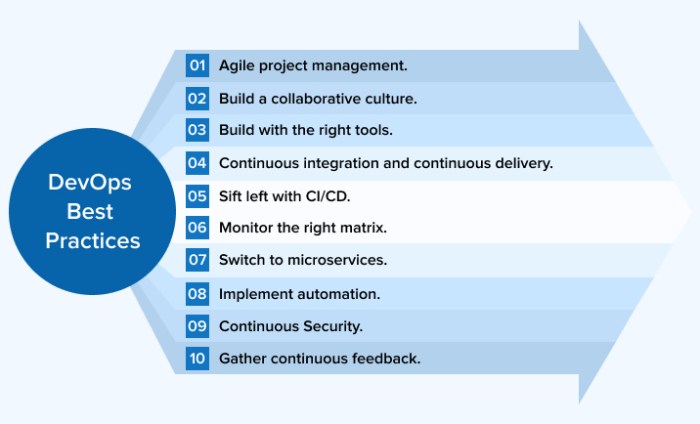

DevOps best practices offer a structured approach to achieving seamless software delivery. This guide delves into key principles, focusing on collaboration, automation, and security throughout the entire software development lifecycle. Understanding these best practices is crucial for streamlining processes, enhancing efficiency, and ultimately, building better software.

The document explores various facets of DevOps, including Continuous Integration and Continuous Delivery (CI/CD), Infrastructure as Code (IaC), monitoring, security, collaboration, performance measurement, version control, and the essential cultural aspects. It provides practical insights into implementing these practices and offers valuable comparisons across different methodologies.

Defining DevOps Best Practices

DevOps best practices represent a set of guidelines and principles aimed at streamlining software development and deployment processes. They emphasize collaboration, automation, and continuous improvement to deliver software more efficiently and reliably. These practices are crucial for organizations seeking to improve their agility and responsiveness in today’s fast-paced technological landscape.

Effective DevOps implementation necessitates a shift in mindset, moving away from siloed teams towards a unified approach that values shared responsibility and continuous feedback. By embracing these practices, organizations can significantly enhance their ability to respond to changing market demands and deliver high-quality software products.

Core Principles of DevOps Best Practices

DevOps principles are built on the foundation of collaboration, automation, and continuous feedback loops. They encourage close communication and shared responsibility across development and operations teams. These principles facilitate quicker iteration cycles, enhanced quality, and improved efficiency.

- Collaboration: Cross-functional teams are essential for effective DevOps. Strong communication channels and shared goals are paramount to achieving synergy and alignment. This often involves breaking down traditional departmental silos and promoting open communication channels.

- Automation: Automating repetitive tasks is a key element of DevOps. This streamlines processes, reduces errors, and accelerates delivery cycles. Automation can encompass tasks like building, testing, deploying, and monitoring applications.

- Continuous Integration/Continuous Delivery (CI/CD): CI/CD pipelines automate the software delivery process, enabling frequent integration and rapid deployment of code changes. This allows teams to identify and address issues quickly, reducing the time between code changes and deployment.

- Infrastructure as Code (IaC): Treating infrastructure as code enables greater automation and consistency in the deployment and management of infrastructure. This allows for faster provisioning, better reproducibility, and reduced manual intervention.

- Monitoring and Feedback: Continuous monitoring of applications and infrastructure provides valuable insights into performance and identifies potential issues. This data-driven approach helps optimize processes and ensure high availability.

Importance of Collaboration and Communication in DevOps

Effective collaboration and communication are the cornerstones of successful DevOps implementations. These elements foster a culture of shared responsibility and knowledge-sharing, ultimately leading to more efficient and reliable software delivery.

Open communication channels, shared tools, and collaborative workflows are vital. Regular meetings, status updates, and feedback sessions facilitate seamless information flow between development and operations teams. This approach ensures that all stakeholders are aware of the progress and any potential roadblocks, allowing for proactive problem-solving. A common understanding of goals and responsibilities strengthens the overall synergy and responsiveness of the organization.

Key Elements of a Successful DevOps Implementation

Successful DevOps implementations are characterized by a combination of technical proficiency, cultural shifts, and a commitment to continuous improvement.

- Culture of Collaboration: A supportive and collaborative culture is essential for breaking down silos and fostering a shared sense of responsibility between development and operations teams.

- Shared Responsibility: Shared responsibility for the entire software delivery lifecycle fosters a collaborative environment, promoting open communication and a unified approach.

- Automation Tools: Leveraging automation tools such as CI/CD pipelines and infrastructure as code is crucial for streamlining processes, reducing manual intervention, and accelerating delivery cycles.

- Monitoring and Feedback Loops: Continuous monitoring and feedback loops are essential for identifying performance bottlenecks, detecting issues promptly, and enabling iterative improvement of the entire software delivery process.

- Measurement and Metrics: Tracking key metrics and using data-driven insights to evaluate and refine processes is critical for measuring the effectiveness of DevOps practices and ensuring continuous improvement.

Comparing DevOps Methodologies (Agile, Lean)

Different methodologies, such as Agile and Lean, can be integrated with DevOps principles. A comparison of their approaches can illuminate how they complement each other and contribute to achieving optimal results.

| Feature | Agile | Lean | DevOps |
|---|---|---|---|
| Focus | Iterative development, customer feedback | Eliminating waste, continuous improvement | Collaboration, automation, continuous delivery |
| Methodology | Sprints, user stories, iterative cycles | Value stream mapping, just-in-time production | CI/CD pipelines, infrastructure as code |
| Key Principles | Customer satisfaction, adaptation to change | Value creation, waste reduction, continuous improvement | Automation, collaboration, feedback loops |

Continuous Integration and Continuous Delivery (CI/CD)

CI/CD pipelines are crucial for modern software development, enabling faster and more reliable releases. They automate the process of building, testing, and deploying code, minimizing manual intervention and reducing the risk of errors. This approach significantly improves the development lifecycle and allows for quicker responses to market demands.

Importance of Automation in CI/CD Pipelines

Automation is fundamental to the success of CI/CD. Automated tasks streamline the entire process, from code compilation to deployment. This reduces human error, speeds up the release cycle, and allows developers to focus on writing quality code rather than repetitive manual tasks. Automated testing and deployment prevent delays and improve consistency. Furthermore, automation ensures that changes are consistently applied across all environments, promoting reliability and reducing the likelihood of introducing errors during deployments.

Benefits of Implementing CI/CD for Faster Release Cycles

Implementing CI/CD pipelines directly translates to faster release cycles. By automating the build, test, and deployment processes, teams can release software updates more frequently and consistently. This increased frequency allows for quicker feedback loops, enabling developers to address issues earlier and incorporate customer feedback more rapidly. Faster releases improve customer satisfaction and market responsiveness. For example, a company using CI/CD to deploy updates daily might catch and resolve bugs earlier, leading to a more stable product.

Steps Involved in Setting Up a Robust CI/CD Pipeline

A robust CI/CD pipeline typically involves several key steps. First, establishing a version control system (like Git) is crucial for managing code changes. Next, define and automate build processes, ensuring the code compiles correctly and generates necessary artifacts. Implement automated tests at various stages, including unit, integration, and system tests. Finally, integrate automated deployment to various environments (development, staging, production) with a defined strategy.

This structured approach helps manage the transition from development to production smoothly.
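The staged flow described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular CI/CD tool works: the `Stage` class, the `run_pipeline` function, and the stage names are all hypothetical, and each stage body is a stand-in for a real build, test, or deploy command.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # returns True on success


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for stage in stages:
        if not stage.run():
            print(f"stage '{stage.name}' failed; stopping pipeline")
            break
        completed.append(stage.name)
    return completed


# Hypothetical stage bodies; a real pipeline would invoke build and test tools.
pipeline = [
    Stage("build", lambda: True),
    Stage("unit-tests", lambda: True),
    Stage("integration-tests", lambda: False),  # simulated failure
    Stage("deploy-staging", lambda: True),
]

print(run_pipeline(pipeline))
```

Because the simulated integration tests fail, the deployment stage never runs. That fail-fast ordering is what keeps a broken change from reaching later environments.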

Structured Approach to Testing within a CI/CD Pipeline

Testing is an integral part of a CI/CD pipeline. A structured approach to testing ensures that code quality is maintained throughout the development lifecycle. This approach typically involves a tiered testing strategy. Unit tests verify individual components, integration tests confirm interactions between different modules, and system tests validate the entire application’s functionality. Automated tests are crucial for detecting and resolving issues early in the development process, preventing regressions and improving code quality.
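As a rough illustration of the tiered strategy, the sketch below uses Python's standard `unittest` module. The functions `parse_price` and `order_total`, and the `run_tier` helper, are hypothetical names invented for this example. The unit test checks one function in isolation, the integration test checks two functions working together, and the cheaper tier runs first.

```python
import unittest
from typing import List


def parse_price(text: str) -> float:
    """Unit under test: convert a string such as '$4.50' to a float."""
    return float(text.strip().lstrip("$"))


def order_total(prices: List[str], tax_rate: float) -> float:
    """Combines parse_price with tax logic; covered by the integration test."""
    subtotal = sum(parse_price(p) for p in prices)
    return round(subtotal * (1 + tax_rate), 2)


class UnitTests(unittest.TestCase):
    def test_parse_price(self):
        self.assertEqual(parse_price(" $4.50 "), 4.5)


class IntegrationTests(unittest.TestCase):
    def test_order_total(self):
        self.assertEqual(order_total(["$10.00", "$5.00"], 0.10), 16.5)


def run_tier(case) -> bool:
    """Run one tier of tests and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(case)
    return unittest.TextTestRunner(verbosity=0).run(suite).wasSuccessful()


# Run the fast tier first; skip the slower tier if it fails.
for tier in (UnitTests, IntegrationTests):
    if not run_tier(tier):
        break
```

System tests would follow the same pattern against a deployed instance of the application, which is beyond what a self-contained snippet can show.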

CI/CD Tools and Their Functionalities

The following table showcases a selection of popular CI/CD tools and their core functionalities:

| Tool | Functionality |
|---|---|
| Jenkins | Open-source automation server, widely used for building, testing, and deploying software. It provides extensive customization options and integrates with various tools. |
| GitHub Actions | CI/CD platform integrated directly into GitHub, streamlining workflows and automating tasks within the GitHub ecosystem. It is particularly suitable for developers using GitHub repositories. |
| GitLab CI/CD | CI/CD platform integrated into GitLab, facilitating automation and integration within the GitLab platform. It provides a comprehensive suite of tools for developers using GitLab. |
| CircleCI | Cloud-based CI/CD platform known for its ease of use and scalability. It provides a user-friendly interface and is suitable for various project sizes. |
| Travis CI | Hosted CI/CD service that supports many languages, including Ruby and Python. Builds are configured through a `.travis.yml` file in the repository. |

Infrastructure as Code (IaC)

Infrastructure as Code (IaC) is a powerful approach to managing and provisioning infrastructure resources, enabling automation and reducing the risk of human error. By treating infrastructure as code, organizations can leverage version control, automation tools, and repeatable processes to achieve consistency and efficiency in their infrastructure deployments. This method is fundamental to achieving agility and scalability within a DevOps environment.

IaC leverages declarative configuration files to define the desired state of the infrastructure. Tools then manage the actual infrastructure to match this desired state, automatically handling configuration changes and ensuring consistency across environments. This approach reduces the reliance on manual processes and scripting, leading to significant improvements in efficiency and accuracy.
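The desired-state idea can be illustrated with a small sketch. It compares a declared configuration with what currently exists and derives the actions needed to close the gap. The `plan` function and the resource dictionaries are hypothetical and far simpler than what real IaC tools do, but the comparison step is the same in spirit.

```python
from typing import Dict, List, Tuple

Resource = Dict[str, str]


def plan(desired: Dict[str, Resource],
         actual: Dict[str, Resource]) -> List[Tuple[str, str]]:
    """Compare desired and actual state; return (action, resource_name) pairs."""
    actions = []
    for name, spec in desired.items():
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in actual:
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical resources, for illustration only.
desired = {
    "web": {"size": "small", "image": "app:2"},
    "db": {"size": "large", "image": "postgres:16"},
}
actual = {
    "web": {"size": "small", "image": "app:1"},
    "cache": {"size": "small", "image": "redis:7"},
}

print(plan(desired, actual))
# → [('update', 'web'), ('create', 'db'), ('delete', 'cache')]
```

When the actual state already matches the declaration, the plan is empty. This is why applying the same configuration repeatedly is safe.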

Benefits of Using IaC for Infrastructure Management

IaC automates the creation, configuration, and management of infrastructure resources, significantly reducing manual effort and the potential for errors. This leads to faster deployment cycles, improved consistency across environments, and reduced operational overhead. Version control of IaC code allows for tracking changes, rollback capabilities, and easier collaboration amongst development teams.

Advantages of IaC over Manual Configuration

Manual infrastructure configuration is prone to errors, inconsistencies, and time-consuming tasks. IaC, in contrast, offers significant advantages:

- Consistency: IaC ensures consistent infrastructure deployments across all environments (development, testing, staging, production), eliminating discrepancies and reducing errors.

- Repeatability: Infrastructure can be deployed repeatedly with identical configurations, streamlining the process and preventing manual errors that might arise during repetitive tasks.

- Version Control: IaC code is stored in version control systems (like Git), allowing for tracking changes, reverting to previous states, and collaboration among team members.

- Automation: IaC scripts automate the entire infrastructure provisioning process, eliminating manual steps and reducing the likelihood of human error.

- Efficiency: Automating infrastructure management saves time and resources, allowing teams to focus on higher-level tasks and improving overall efficiency.

Examples of IaC Tools and Their Use Cases

Several powerful IaC tools are available for managing various infrastructure components. Here are some examples:

- AWS CloudFormation: Used for provisioning and managing AWS resources, enabling automation of tasks such as creating virtual machines, databases, and storage solutions. A powerful tool for defining and deploying complex AWS architectures.

- Terraform: A popular tool that supports multiple cloud providers (AWS, Azure, GCP) and on-premises infrastructure. Its core has been licensed under the Business Source License since 2023, and OpenTofu is an open-source fork. It allows for defining infrastructure resources in a declarative manner, promoting consistency and automation.

- Pulumi: This IaC tool provides a high-level abstraction for defining and managing infrastructure resources across various cloud providers, making the process more accessible for developers with diverse skillsets. It supports a variety of programming languages, enabling integration with existing development workflows.

- Ansible: While primarily known for configuration management, Ansible also supports IaC tasks, especially for automating server configurations and deployments. Its use cases are extensive, encompassing tasks from operating system updates to application deployments.

Structured Approach for Managing and Maintaining IaC Configurations

A well-structured approach for IaC management involves:

- Version Control: Storing IaC code in a version control system like Git, enabling tracking changes, collaboration, and rollback capabilities.

- Modular Design: Breaking down complex infrastructure into smaller, reusable modules, improving maintainability and reducing redundancy.

- Testing: Implementing unit tests and integration tests for IaC code, ensuring accuracy and reliability before deploying to production.

- Documentation: Clearly documenting IaC configurations and their intended functionalities, aiding understanding and maintenance.

- Security: Implementing security best practices in IaC code to protect sensitive data and configurations.

IaC Tools Summary

| Tool | Key Features |
|---|---|
| AWS CloudFormation | Declarative language, AWS-centric, robust management, good for complex AWS architectures |
| Terraform | Cross-cloud support, declarative, source-available (open-source fork: OpenTofu), strong community support |
| Pulumi | Programming language integration, cross-cloud support, good for developers familiar with programming languages |
| Ansible | Configuration management capabilities, idempotent operations, good for automating server configurations |

Monitoring and Logging

Effective monitoring and logging are crucial components of a robust DevOps pipeline. They provide visibility into system performance, enabling proactive issue resolution and facilitating continuous improvement. Without proper monitoring and logging, teams struggle to identify and address performance bottlenecks, leading to instability and potential service disruptions.

Monitoring systems provide a real-time view of application and infrastructure health, allowing DevOps teams to react swiftly to emerging issues. Comprehensive logging systems capture detailed information about events, facilitating post-mortem analysis and aiding in root cause identification. This data-driven approach is essential for maintaining high-quality services and improving overall operational efficiency.

Significance of Monitoring Systems

Monitoring systems are vital for ensuring continuous system health and stability. They provide insights into key performance indicators (KPIs), enabling proactive issue identification and resolution before they impact end-users. Monitoring systems allow teams to track metrics like response times, error rates, and resource utilization in real-time, facilitating informed decision-making. This proactive approach minimizes downtime and ensures consistent service availability.

Methods of Implementing Robust Logging Systems

Implementing a robust logging system involves careful consideration of several factors. Centralized logging platforms offer a single source of truth for all system events, improving searchability and analysis. Structured logging, which uses standardized formats for log entries, enhances the efficiency of log analysis tools. Using log aggregation tools, such as Elasticsearch, Logstash, and Kibana (ELK stack), allows for efficient searching and visualization of logs.

The choice of logging framework and tools should align with the specific needs and complexity of the application or infrastructure being monitored.
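To make structured logging concrete, here is a minimal sketch using Python's standard `logging` module. It emits each entry as one JSON object per line, a format that log aggregation tools can generally parse without custom rules. The `JsonFormatter` class and the field names `service` and `request_id` are assumptions chosen for this example, not a standard.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via `extra=` become attributes on the record.
        for key in ("service", "request_id"):
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("checkout")
logger.setLevel(logging.INFO)
logger.addHandler(handler)

logger.info("payment accepted",
            extra={"service": "checkout", "request_id": "abc123"})
```

Keeping field names consistent across services is what makes centralized search useful: a query on `request_id` can then follow one request through every component that logged it.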

Importance of Alerting Systems

Alerting systems are essential for promptly notifying teams about critical events or anomalies. They act as an early warning system, allowing for swift responses to potential issues. By configuring alerts based on specific thresholds or patterns, teams can ensure timely intervention, preventing minor issues from escalating into major outages. Effective alerting systems reduce mean time to resolution (MTTR), improving overall system reliability and user experience.
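Threshold-based alerting can be sketched as a simple rule evaluation. The `AlertRule` class, the metric names, and the threshold values below are hypothetical. A real alerting system would also handle evaluation windows, deduplication, and notification routing.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AlertRule:
    metric: str
    threshold: float
    message: str


def evaluate(rules: List[AlertRule], metrics: Dict[str, float]) -> List[str]:
    """Return a message for every rule whose metric exceeds its threshold."""
    fired = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is not None and value > rule.threshold:
            fired.append(f"{rule.message} ({rule.metric}={value})")
    return fired


# Hypothetical rules and a snapshot of current metric values.
rules = [
    AlertRule("error_rate", 0.05, "Error rate above 5%"),
    AlertRule("p95_latency_ms", 500, "p95 latency above 500 ms"),
]
metrics = {"error_rate": 0.08, "p95_latency_ms": 320}

print(evaluate(rules, metrics))
# → ['Error rate above 5% (error_rate=0.08)']
```

Including the metric name and observed value in the message follows the advice that alerts should make the source and nature of a problem quick to identify.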

Role of Dashboards in Providing Comprehensive Insights

Dashboards provide a visual representation of key metrics and trends, offering a high-level overview of system performance. They consolidate information from various monitoring sources, presenting a holistic view of the system’s health. Interactive dashboards enable real-time monitoring and analysis, allowing teams to drill down into specific areas of concern. Well-designed dashboards are crucial for rapid identification of issues and facilitate data-driven decision-making.

Best Practices for Creating and Maintaining Effective Logs and Alerts

Creating and maintaining effective logs and alerts requires adherence to best practices. First, establish clear logging policies and guidelines to ensure consistent data capture. Implement appropriate log retention policies to balance data storage requirements with the need for historical analysis. Regularly review and refine alerts to ensure they remain relevant and responsive to evolving system behavior. Prioritize clear and concise alert messages, facilitating rapid identification of the source and nature of the problem.

The use of automation tools to streamline alert management and response processes is crucial.

Security in DevOps

Integrating security into the DevOps lifecycle is paramount for building robust and reliable applications. Ignoring security concerns at any stage can lead to vulnerabilities that compromise the entire system, potentially causing significant financial and reputational damage. A proactive security approach, embedded throughout the development process, ensures that security is not an afterthought or an add-on but a fundamental element of every step.

By embedding security into the CI/CD process, teams can identify and mitigate potential vulnerabilities early, reducing the risk of deploying insecure code to production. This proactive approach not only improves application security but also fosters a culture of security awareness throughout the organization.

Security Scanning in CI/CD Pipelines

Security scanning is crucial for identifying vulnerabilities early in the software development lifecycle. Integrating automated security scanning tools into CI/CD pipelines allows for continuous vulnerability assessment, enabling teams to promptly address potential issues before they reach production. This proactive approach minimizes the risk of deploying vulnerable code and saves time and resources. Early detection often leads to faster resolution, preventing vulnerabilities from becoming costly problems.
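One common way to wire scanning into a pipeline is a gate that fails the build when a scanner reports findings at or above a chosen severity. The sketch below assumes a hypothetical findings format (a list of dictionaries with `id`, `severity`, and `title`). Real scanners each have their own report schema, so the parsing step would differ.

```python
from typing import Dict, List

SEVERITY_ORDER = ["low", "medium", "high", "critical"]


def blocking_findings(findings: List[Dict[str, str]],
                      fail_on: str = "high") -> List[Dict[str, str]]:
    """Return the findings whose severity is at or above `fail_on`."""
    minimum = SEVERITY_ORDER.index(fail_on)
    return [f for f in findings
            if SEVERITY_ORDER.index(f["severity"]) >= minimum]


# Hypothetical scanner output, for illustration only.
findings = [
    {"id": "EXAMPLE-1", "severity": "low", "title": "Verbose error message"},
    {"id": "EXAMPLE-2", "severity": "critical", "title": "Hard-coded credential"},
]

blockers = blocking_findings(findings, fail_on="high")
for finding in blockers:
    print(f"BLOCKING {finding['id']}: {finding['title']}")

# A pipeline step would exit with this code to pass or fail the build.
exit_code = 1 if blockers else 0
print("exit code:", exit_code)
```

Setting the threshold is a policy decision: a stricter `fail_on` value catches more issues but can stall delivery if the backlog of low-severity findings is large.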

Secure Coding Practices

Adhering to secure coding practices is vital for minimizing the introduction of vulnerabilities. Developers should be trained on secure coding principles, and tools that automatically detect potential vulnerabilities should be incorporated into the development workflow. Proactive training and implementation of these principles help developers write secure code from the outset, significantly reducing the chance of introducing security flaws.

This approach prioritizes security at the source, reducing the need for costly remediation efforts later.

Security Testing in the DevOps Workflow

Incorporating security testing into the DevOps workflow is essential for verifying that applications meet security requirements. Dynamic Application Security Testing (DAST), Static Application Security Testing (SAST), and Penetration Testing (Pentesting) are crucial components of this process. Implementing these testing methodologies ensures that applications are thoroughly scrutinized for potential weaknesses, safeguarding against exploitation. The comprehensive approach to testing helps uncover vulnerabilities and weaknesses, strengthening the overall security posture.

Common Security Vulnerabilities and Mitigation Strategies

Implementing appropriate mitigation strategies for common security vulnerabilities is essential for building secure applications. A proactive approach, combining knowledge and tools, is vital for minimizing risk. This table outlines some prevalent vulnerabilities and their corresponding mitigation strategies:

| Vulnerability | Description | Mitigation Strategy |
|---|---|---|
| SQL Injection | Attackers exploit vulnerabilities in SQL queries to gain unauthorized access or manipulate data. | Use parameterized queries, input validation, and stored procedures. |
| Cross-Site Scripting (XSS) | Attackers inject malicious scripts into web pages viewed by other users. | Validate and sanitize all user-supplied data, utilize output encoding techniques, and implement robust input validation rules. |
| Cross-Site Request Forgery (CSRF) | Attackers trick users into performing unwanted actions on a web application. | Implement anti-CSRF tokens, set the SameSite attribute on session cookies, and never change state in response to GET requests. |
| Broken Authentication and Session Management | Improper implementation of authentication and session management mechanisms can allow unauthorized access. | Implement strong password policies, use secure hashing algorithms, and implement multi-factor authentication. |
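The parameterized-query mitigation for SQL injection is easy to demonstrate with Python's built-in `sqlite3` module. The table, the data, and the `find_user` helper are made up for this example. The key point is that user input is bound through a `?` placeholder and never concatenated into the SQL string.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)",
                 [("alice",), ("bob",)])


def find_user(conn: sqlite3.Connection, name: str) -> list:
    # The ? placeholder passes `name` as data, never as SQL text.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


print(find_user(conn, "alice"))             # → [(1, 'alice')]
print(find_user(conn, "alice' OR '1'='1"))  # → []
```

The second call shows a classic injection payload being treated as an ordinary (non-matching) name. Had the query been built by string concatenation, the same input would have matched every row.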

Collaboration and Communication

Effective collaboration and communication are cornerstones of successful DevOps implementations. They bridge the gap between development and operations teams, fostering a shared understanding and a unified approach to software delivery. This shared vision is critical for streamlined workflows, rapid issue resolution, and ultimately, higher quality software releases.

Open communication channels and well-defined collaboration methods are essential for transparency and efficiency in the DevOps lifecycle. By fostering a culture of shared knowledge and mutual respect, teams can overcome communication barriers and leverage each other’s expertise. This, in turn, accelerates the development and deployment processes, reduces errors, and improves overall project outcomes.

Methods for Fostering Effective Collaboration

Collaboration between development and operations teams requires a concerted effort to break down silos and establish shared goals. This includes actively seeking input from all team members, recognizing the diverse skillsets and experiences within the organization, and establishing clear communication protocols. Crucially, establishing shared responsibility for outcomes is vital. Regular meetings, cross-functional training, and shared project spaces promote understanding and trust.

Importance of Communication Channels

Clear and consistent communication channels are paramount for efficient information flow within a DevOps environment. This facilitates the seamless exchange of updates, feedback, and concerns across teams. Real-time updates and readily accessible documentation minimize ambiguity and reduce the risk of errors. Effective communication channels support transparency and accountability.

Examples of Tools and Techniques to Improve Collaboration

Several tools and techniques can significantly enhance collaboration and communication. Project management tools, such as Jira or Trello, facilitate task tracking, issue management, and progress monitoring. Instant messaging platforms, like Slack or Microsoft Teams, enable real-time communication and quick responses to urgent issues. Video conferencing tools, like Zoom or Google Meet, facilitate face-to-face interaction and foster a sense of community.

Significance of Shared Understanding and Knowledge

A shared understanding of processes, tools, and technologies is critical for effective collaboration. This includes having a common vocabulary, consistent documentation standards, and established procedures for handling issues. Training programs, workshops, and knowledge-sharing sessions contribute to building this shared knowledge base. Shared knowledge minimizes ambiguity, fosters efficiency, and promotes a cohesive approach to problem-solving.

Comparison of Communication Tools for DevOps

| Tool | Description | Strengths | Weaknesses |
|---|---|---|---|
| Slack | Real-time messaging platform | Fast communication, searchable messages, channels for different teams | Can be overwhelming with too many messages, requires active participation |
| Microsoft Teams | Collaboration hub with chat, video calls, file sharing | Integrated with other Microsoft products, versatile features | Can be complex for non-Microsoft users, might require additional licenses |
| Jira | Project management and issue tracking tool | Centralized issue tracking, workflow management, task assignment | Can be overwhelming for simple communication, might not be ideal for ad-hoc discussions |
| Confluence | Wiki-based documentation platform | Centralized knowledge base, version control for documents, searchable | Might not be ideal for real-time communication, requires careful maintenance of content |

Measuring and Improving Performance

Effective DevOps hinges on a constant cycle of measurement and improvement. Quantifying performance allows teams to identify bottlenecks, optimize processes, and ultimately deliver value more efficiently. Understanding key performance indicators (KPIs) is critical for demonstrating the ROI of DevOps initiatives and driving continuous improvement.

Performance Measurement Framework

A robust framework for measuring DevOps performance encompasses several key areas. It should include metrics that reflect the efficiency of development, deployment, and operational processes. This framework facilitates tracking progress, identifying areas for improvement, and ultimately leading to more streamlined and reliable software delivery.

Key Performance Indicators (KPIs)

Several KPIs provide insights into DevOps performance. These metrics should be chosen based on the specific needs and goals of the organization. Examples of critical KPIs include deployment frequency, lead time for changes, change failure rate, time to restore service, and customer satisfaction scores.

Regular Review and Evaluation

Regularly reviewing and evaluating DevOps processes is essential for maintaining efficiency and effectiveness. This process involves analyzing data from KPIs, identifying trends, and making adjustments to processes as needed. Consistent review cycles, ideally weekly or monthly, ensure that DevOps practices remain aligned with organizational objectives and are responsive to evolving needs.

Identifying Areas for Improvement

Identifying areas for improvement requires a structured approach. Analyzing performance metrics, identifying deviations from targets, and examining contributing factors are crucial steps. Root cause analysis can be employed to understand the underlying reasons for performance issues, leading to targeted and effective solutions.

Performance Metrics Table

| Metric | Significance | Example Target |
|---|---|---|
| Deployment Frequency | Measures the rate at which new code is deployed to production. | 10+ deployments per day |
| Lead Time for Changes | Tracks the time taken to deploy code changes from code commit to production deployment. | Under 24 hours |
| Change Failure Rate | Indicates the percentage of deployments that result in issues. | Under 5% |
| Time to Restore Service | Measures the time taken to restore service after an incident. | Under 1 hour |
| Customer Satisfaction Score (CSAT) | Reflects user satisfaction with the software and services. | Above 90% |
| Mean Time Between Failures (MTBF) | Measures the average time between system failures. | High MTBF (e.g., 100+ hours) |
| Mean Time To Recovery (MTTR) | Measures the average time taken to restore service after a failure. | Under 1 hour |
| Infrastructure Automation Rate | Measures the proportion of infrastructure management tasks automated. | Over 80% |
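Several of these metrics can be derived directly from deployment records. The sketch below computes deployment frequency, mean lead time for changes, and change failure rate from a small made-up data set. The `Deployment` record and its fields are assumptions for illustration, since real data would come from a CI/CD system or incident tracker.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool  # True if the deployment caused an incident or rollback


def deployment_frequency(deploys: List[Deployment], days: int) -> float:
    """Average number of deployments per day over the period."""
    return len(deploys) / days


def mean_lead_time(deploys: List[Deployment]) -> timedelta:
    """Average time from commit to production deployment."""
    total = sum((d.deployed_at - d.committed_at for d in deploys), timedelta())
    return total / len(deploys)


def change_failure_rate(deploys: List[Deployment]) -> float:
    """Fraction of deployments that resulted in a failure."""
    return sum(d.failed for d in deploys) / len(deploys)


# Made-up records covering a two-day window.
t0 = datetime(2024, 1, 1, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=2), False),
    Deployment(t0 + timedelta(hours=5), t0 + timedelta(hours=9), False),
    Deployment(t0 + timedelta(hours=24), t0 + timedelta(hours=30), True),
    Deployment(t0 + timedelta(hours=26), t0 + timedelta(hours=30), False),
]

print(deployment_frequency(deploys, days=2))  # → 2.0
print(mean_lead_time(deploys))                # → 4:00:00
print(change_failure_rate(deploys))           # → 0.25
```

Tracking these values over successive review cycles, rather than as single snapshots, is what reveals whether process changes are actually helping.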

Version Control

Version control systems are fundamental to successful DevOps practices. They provide a centralized repository for code, enabling teams to track changes, collaborate effectively, and revert to previous versions if needed. This crucial aspect ensures that software development is reliable, transparent, and manageable.

Version control systems, like Git, are not merely about tracking code; they form the bedrock of collaboration and allow for parallel development. This collaborative approach, combined with robust versioning, significantly reduces the risk of errors and facilitates the integration of changes.

The Role of Version Control Systems in DevOps

Version control systems (VCS) are essential tools for DevOps teams. They track changes to code, configurations, and infrastructure. This allows teams to revert to previous versions, collaborate effectively, and manage dependencies. By maintaining a complete history of changes, VCSs provide a robust audit trail, making it easier to identify and fix issues.

Benefits of Using Git for Code Management

Git, a distributed version control system, offers numerous advantages for DevOps teams. Its distributed nature means that each developer has a complete copy of the repository, enabling offline work and reducing reliance on a central server. This feature also promotes faster development cycles and enhances resilience. Git’s branching and merging capabilities facilitate parallel development, allowing multiple features to be developed simultaneously without interfering with each other.

This streamlined workflow is a significant contributor to improved development velocity.

Branching and Merging in Git

Branching and merging are core concepts in Git that enable parallel development. Branching allows developers to create isolated lines of work, making changes without affecting the main codebase. Merging integrates these changes back into the main codebase when they are ready. This iterative approach allows for efficient collaboration and the seamless integration of features.

Effective Branching Strategies

Effective branching strategies are crucial for maintaining a clean and organized codebase. A common approach is the Gitflow model, which defines specific branches for releases, features, and hotfixes alongside long-lived main and develop branches. Another popular strategy is GitHub Flow, which keeps a single long-lived main branch and integrates changes through short-lived feature branches merged via pull requests. The choice of branching strategy depends on the project’s specific needs and complexity.

Choosing the correct strategy is critical for maintaining a consistent and predictable development process.

- Feature Branches: These branches are used for developing new features or bug fixes. They allow for isolated development and testing, keeping unfinished work out of the main codebase.

- Release Branches: Created from a stable point in the integration branch (develop in Gitflow), these branches are dedicated to preparing a specific release. They contain all the features that will be included in that release.

- Hotfix Branches: Used to address critical bugs in a deployed release. In Gitflow they are branched from the production branch (main), fixed, and merged back into both main and develop so the fix is not lost in the next release.

These strategies, when implemented correctly, minimize conflicts and allow for faster and more reliable releases.
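
As a rough illustration of the branch types listed above, the following Python sketch maps a branch name to the branch it would start from and the branches it would merge into under a conventional Gitflow layout. The prefixes (`feature/`, `release/`, `hotfix/`), the branch names `main` and `develop`, and the `branch_policy` helper are assumptions made for this example; many teams use different names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BranchPolicy:
    kind: str             # feature, release, or hotfix
    base: str             # branch this kind of branch is created from
    merge_targets: tuple  # branches it is merged into when finished


# Conventional Gitflow layout (branch names and prefixes are assumptions).
_POLICIES = {
    "feature/": BranchPolicy("feature", "develop", ("develop",)),
    "release/": BranchPolicy("release", "develop", ("main", "develop")),
    "hotfix/": BranchPolicy("hotfix", "main", ("main", "develop")),
}


def branch_policy(name: str) -> BranchPolicy:
    """Look up the policy for a branch name such as 'hotfix/login-crash'."""
    for prefix, policy in _POLICIES.items():
        # Require something after the prefix so 'feature/' alone is rejected.
        if name.startswith(prefix) and len(name) > len(prefix):
            return policy
    raise ValueError(f"unrecognised branch name: {name!r}")


policy = branch_policy("hotfix/login-crash")
print(policy.base, policy.merge_targets)
```

A check like this could run in a pre-push hook or a CI job to reject branches that do not follow the agreed convention.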

Comparison of Version Control Systems

| Version Control System | Features | Pros | Cons |
|---|---|---|---|
| Git | Distributed, branching/merging, powerful command-line interface | Scalable, flexible, robust, open-source | Steeper learning curve for beginners |
| SVN | Centralized, simple branching/merging | Easy to learn, good for smaller teams | Limited branching capabilities, performance issues with large projects |
| Mercurial | Distributed, lightweight, efficient | Fast, good for smaller projects | Less mature ecosystem compared to Git |

This table summarizes key features, advantages, and disadvantages of common version control systems, aiding in the selection of the most suitable system for specific needs.

Culture and Mindset: DevOps Best Practices

A strong DevOps culture is paramount to achieving success. It’s not just about implementing tools and processes; it’s about fostering a shared understanding, collaborative spirit, and a mindset of continuous improvement within the team. This iterative approach is crucial for adapting to changing needs and maximizing efficiency.

A successful DevOps implementation relies heavily on a collaborative and iterative culture. This involves shared responsibility, open communication, and a willingness to adapt and learn from failures. Teams that embrace these values are better equipped to overcome challenges and achieve significant improvements in their workflows.

Collaborative and Iterative Culture

A collaborative and iterative culture fosters an environment where team members feel empowered to share ideas, offer feedback, and work together towards common goals. This synergy is essential for effectively managing the complexities of modern software development and operations. Successful DevOps teams consistently leverage the collective knowledge and expertise of all team members, enabling faster problem-solving and more robust solutions.

Shared Understanding and Goals

A shared understanding and alignment on goals is critical for a productive DevOps team. This includes a common vision for how the team will work together, what metrics will be used to measure success, and what the overall objectives are. Clear communication of roles, responsibilities, and expectations ensures that everyone is on the same page and working towards the same outcome. This reduces ambiguity and fosters a unified approach to problem-solving.

Fostering a Positive and Supportive Environment

Creating a positive and supportive environment is key to encouraging open communication and collaboration. This involves fostering trust among team members, recognizing and rewarding contributions, and actively addressing any conflicts that arise. Team-building activities and regular feedback sessions can contribute significantly to this positive dynamic.

Continuous Learning and Adaptation

Continuous learning and adaptation are essential for maintaining a competitive edge in the rapidly evolving technological landscape. Teams should actively seek opportunities for professional development, explore new tools and technologies, and remain flexible in their approach to problem-solving. A culture of continuous improvement ensures that the team is prepared to tackle future challenges and embrace new opportunities.

Characteristics of a Successful DevOps Culture

| Characteristic | Description |
|---|---|
| Shared Vision & Goals | Clear understanding of objectives and how team members contribute to achieving them. |
| Open Communication | Transparent and frequent communication channels, fostering active listening and feedback. |
| Collaboration & Trust | Empowered team members working together, respecting diverse perspectives and contributions. |
| Continuous Learning | Actively seeking knowledge and skills enhancement, embracing new technologies and methodologies. |
| Iterative Approach | Embracing experimentation, continuous feedback loops, and adaptation to evolving requirements. |
| Problem-Solving Focus | Proactive identification and resolution of issues, promoting a growth mindset. |
| Accountability & Ownership | Shared responsibility for outcomes, with clear roles and responsibilities, and a willingness to take ownership. |
| Resilience & Adaptability | Ability to handle change, recover from setbacks, and learn from failures to ensure long-term success. |

Tools and Technologies

DevOps success hinges significantly on the right tools and technologies. Selecting and implementing appropriate tools streamlines workflows, automates tasks, and enhances collaboration among development and operations teams. Effective tool selection is critical for optimizing efficiency and achieving desired outcomes.

Commonly Used DevOps Tools

A diverse range of tools support various aspects of DevOps. These tools automate tasks, facilitate communication, and enable teams to work seamlessly together. Key examples include:

- Version Control Systems (e.g., Git): These systems track changes to code, enabling collaboration, versioning, and rollback capabilities. Git, with its branching and merging features, is a cornerstone for efficient code management and team collaboration.

- Configuration Management Tools (e.g., Ansible, Puppet, Chef): These tools automate the deployment, configuration, and management of infrastructure, ensuring consistency and reducing manual effort. Ansible playbooks, for instance, describe the desired state of servers, and Ansible applies whatever changes are needed to reach that state.

- Containerization Platforms (e.g., Docker): Containerization packages software with its dependencies, enabling portability and consistency across various environments. Docker containers isolate applications, leading to faster deployments and easier management.

- CI/CD Tools (e.g., Jenkins, GitLab CI/CD, CircleCI): These tools automate the build, test, and deployment processes, accelerating software delivery. Jenkins, for example, is a popular open-source automation server used by many organizations.

- Monitoring and Logging Tools (e.g., Prometheus, ELK Stack): These tools track application performance, surface issues, and collect logs, providing insights into system behavior. The ELK Stack, comprising Elasticsearch, Logstash, and Kibana, is a powerful platform for collecting, processing, and visualizing logs.

- Cloud Platforms (e.g., AWS, Azure, GCP): Cloud platforms provide infrastructure, tools, and services to support DevOps practices. Amazon Web Services (AWS) offers a vast array of services, including compute, storage, and networking resources, facilitating scalable deployments.
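
The desired-state idea behind the configuration management tools in this list can be sketched in a few lines of Python. This is a toy model, not how Ansible, Puppet, or Chef are implemented; the `ensure_settings` helper, the dictionary standing in for a server, and the setting names and values are all hypothetical. The point it illustrates is idempotence: applying the same desired state a second time changes nothing.

```python
from __future__ import annotations


def ensure_settings(current: dict, desired: dict) -> tuple[dict, list[str]]:
    """Return (new_state, changed_keys) after enforcing the desired settings.

    Only keys named in `desired` are managed; other keys are left untouched.
    """
    new_state = dict(current)  # never mutate the caller's state in place
    changed = []
    for key, value in desired.items():
        if key not in new_state or new_state[key] != value:
            new_state[key] = value
            changed.append(key)
    return new_state, changed


# Hypothetical server state and desired configuration.
server = {"nginx_version": "1.24", "max_connections": 512}
desired = {"nginx_version": "1.26", "max_connections": 512, "tls": True}

server, changed = ensure_settings(server, desired)
print(changed)  # first run: the two settings that differed

server, changed = ensure_settings(server, desired)
print(changed)  # second run: nothing left to change
```

Reporting which keys changed, rather than only the final state, mirrors the "changed" versus "ok" feedback such tools typically give after a run.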

Selecting Appropriate Tools

Choosing the right DevOps tools is a crucial step. The selection process should consider factors such as project requirements, team expertise, budget constraints, and scalability needs.

- Project Requirements: The specific needs of the project should dictate tool selection. A small-scale project might not require a complex CI/CD pipeline, whereas a large-scale project might need robust tools for managing a vast infrastructure.

- Team Expertise: The team’s existing skills and knowledge of different tools should influence the choice. Selecting tools that align with team capabilities fosters easier adoption and reduces training time.

- Budget Constraints: The cost of tools and associated services must be considered. Open-source alternatives can often be cost-effective, while commercial tools may offer advanced features.

- Scalability Needs: The projected growth of the project should be taken into account. Tools that can easily scale with increasing workloads are essential for long-term success.
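
One way to make the selection criteria above explicit is a weighted scoring matrix. The Python sketch below is only an illustration: the weights, the 1 to 5 ratings, and the candidate names `tool_a` and `tool_b` are made-up placeholders, not recommendations.

```python
# Relative importance of each criterion; assumed values that sum to 1.
CRITERIA_WEIGHTS = {
    "project_fit": 0.4,
    "team_expertise": 0.3,
    "cost": 0.2,
    "scalability": 0.1,
}


def weighted_score(ratings: dict, weights: dict = CRITERIA_WEIGHTS) -> float:
    """Combine per-criterion ratings (higher is better) into one score."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[name] * ratings[name] for name in weights)


def rank_tools(candidates: dict) -> list:
    """Return candidate names ordered from best to worst score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name]),
        reverse=True,
    )


# Hypothetical ratings on a 1 to 5 scale.
candidates = {
    "tool_a": {"project_fit": 4, "team_expertise": 5, "cost": 3, "scalability": 3},
    "tool_b": {"project_fit": 5, "team_expertise": 2, "cost": 4, "scalability": 5},
}
print(rank_tools(candidates))
```

The numbers matter less than the discussion they force: agreeing on weights makes the team state which of the four factors it actually cares about most.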

Benefits of Containerization Technologies

Containerization technologies, like Docker, offer significant advantages for DevOps. Docker images package applications and their dependencies, enabling portability and consistency across different environments. This simplifies deployment and reduces potential compatibility issues.

- Portability: Docker containers run consistently across various environments, including development, testing, and production, ensuring consistent application behavior.

- Isolation: Containers isolate applications, preventing conflicts between different applications or services, thereby enhancing system stability.

- Efficiency: Docker containers share the host operating system’s kernel instead of each running a full guest operating system, which keeps resource consumption low and startup fast compared with virtual machines.

Importance of Cloud Platforms in DevOps

Cloud platforms provide a foundation for DevOps practices. They offer scalable infrastructure, enabling teams to adapt to fluctuating demands. Cloud-based tools facilitate automation, collaboration, and monitoring, which are central to DevOps principles.

- Scalability: Cloud platforms offer the ability to quickly scale resources up or down based on demand, providing flexibility and responsiveness.

- Cost-Effectiveness: Cloud services often offer pay-as-you-go models, potentially reducing upfront investment costs.

- Accessibility: Cloud platforms offer global access to resources, enabling teams to work remotely and collaborate effectively.

DevOps Tools Categorization

The following table categorizes common DevOps tools:

| Tool Category | Examples | Purpose |
|---|---|---|
| Version Control | Git, SVN | Track code changes, manage revisions |
| Configuration Management | Ansible, Puppet, Chef | Automate infrastructure provisioning and management |
| Containerization and Orchestration | Docker, Kubernetes | Package applications in containers and run them at scale |
| CI/CD | Jenkins, GitLab CI/CD, CircleCI | Automate software delivery pipelines |
| Monitoring & Logging | Prometheus, ELK Stack, Datadog | Track application performance, analyze logs |
| Cloud Platforms | AWS, Azure, GCP | Provide infrastructure, services, and tools for DevOps |

Epilogue

In conclusion, mastering DevOps best practices empowers teams to deliver high-quality software more efficiently and reliably. By embracing automation, collaboration, and a culture of continuous improvement, organizations can achieve significant gains in speed, quality, and overall performance. This guide provides a solid foundation for understanding and implementing these vital practices.

Common Queries

What are some common challenges in implementing DevOps best practices?

Implementing DevOps best practices can be challenging due to organizational resistance to change, the need for cultural shifts, and the complexity of integrating various tools and technologies. Overcoming these challenges requires strong leadership, clear communication, and a commitment to continuous improvement.

How can I measure the success of my DevOps implementation?

Success in DevOps implementation is often measured by key performance indicators (KPIs) such as deployment frequency, lead time for changes, time to restore service, change failure rate, and customer satisfaction. Regularly monitoring and analyzing these metrics provides valuable insights for continuous improvement.
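
Four of the indicators named above can be computed from a simple log of deployments. The sketch below assumes a hypothetical `Deployment` record and `delivery_metrics` helper; real data would usually come from a CI/CD system or an incident tracker, and using the median rather than the mean is a choice made here, not a requirement. Customer satisfaction is not covered because it cannot be derived from deployment data.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a production failure?
    restored_at: Optional[datetime] = None   # when service was restored, if it failed


def delivery_metrics(deployments: list, period_days: int) -> dict:
    """Summarise delivery performance over a reporting period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    restore_times = [
        d.restored_at - d.deployed_at for d in failures if d.restored_at is not None
    ]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Hypothetical three-day log.
log = [
    Deployment(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 13)),
    Deployment(datetime(2024, 1, 2, 9), datetime(2024, 1, 2, 11),
               failed=True, restored_at=datetime(2024, 1, 2, 11, 30)),
    Deployment(datetime(2024, 1, 3, 9), datetime(2024, 1, 3, 15)),
]
for name, value in delivery_metrics(log, period_days=3).items():
    print(name, value)
```

Tracking these values over successive periods is more informative than any single snapshot, since the goal is to see whether changes to the process move them in the right direction.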

What are some common security vulnerabilities in DevOps pipelines?

Common security vulnerabilities in DevOps pipelines include insecure configurations, lack of access controls, inadequate logging and monitoring, and vulnerabilities in the codebase itself. Proactive security measures throughout the DevOps lifecycle are crucial for mitigating these risks.

What are the key benefits of using Infrastructure as Code (IaC)?

Infrastructure as Code (IaC) provides numerous benefits, including increased efficiency through automation, improved consistency, reduced errors, and enhanced collaboration. IaC streamlines infrastructure management and reduces the time needed to provision and manage resources.
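
Much of the consistency benefit comes from comparing a declared definition with what actually exists and applying only the difference. The Python sketch below models that comparison step; it is not the algorithm of any specific IaC tool, and `plan_changes` and the resource names are hypothetical.

```python
def plan_changes(declared: dict, actual: dict) -> dict:
    """Work out which resources must be created, updated, or deleted.

    Both arguments map a resource name to its configuration.
    """
    declared_names = set(declared)
    actual_names = set(actual)
    return {
        "create": sorted(declared_names - actual_names),
        "update": sorted(
            name
            for name in declared_names & actual_names
            if declared[name] != actual[name]
        ),
        "delete": sorted(actual_names - declared_names),
    }


# Hypothetical declared definition (kept in version control) and live state.
declared = {
    "web_server": {"size": "medium", "count": 3},
    "database": {"size": "large"},
}
actual = {
    "web_server": {"size": "medium", "count": 2},
    "old_cache": {"size": "small"},
}
print(plan_changes(declared, actual))
```

Because the declared definition lives in version control, a plan like this can be reviewed in the same way as a code change before anything is applied.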